Let’s look at one more interesting thing. I mentioned before that one of the purposes of this example was to examine and visualize our eigenfaces, as they are called: our super columns. I will not disappoint. Let’s write some code that will show us our super columns as they would look to us humans:

def plot_gallery(images, titles, n_row=3, n_col=4): “””Helper function to plot a gallery of portraits””” plt.figure(figsize=(1.8 * n_col, 2.4 * n_row))

plt.subplots_adjust(bottom=0, left=.01, right=.99, top=.90, hspace=.35)

for i in range(n_row * n_col):

plt.subplot(n_row, n_col, i + 1) plt.imshow(images[i], cmap=plt.cm.gray) plt.title(titles[i], size=12)

# plot the gallery of the most significative eigenfaces eigenfaces = pca.components_.reshape((n_components, h, w))

eigenface_titles = [“eigenface %d” % i for i in range(eigenfaces.shape[0])]

plot_gallery(eigenfaces, eigenface_titles)

plt.show()

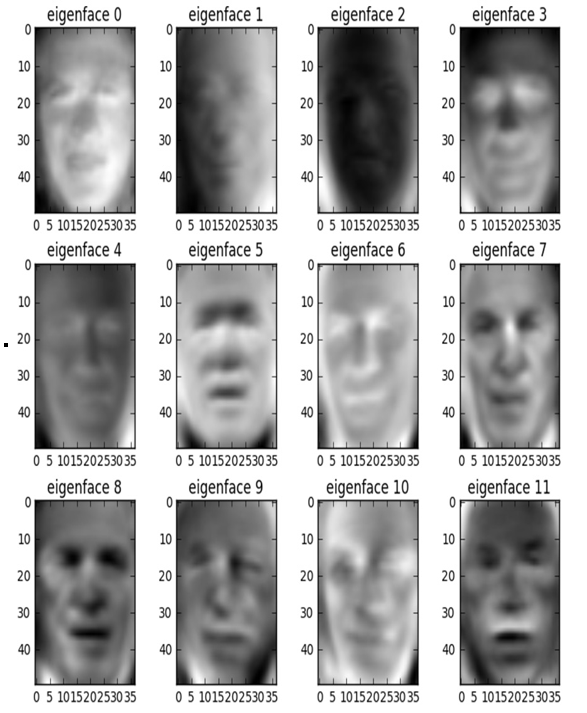

Warning: the faces in Figure 11.23 are a bit creepy!

Figure 11.23 – Performing PCA on the pixels of our faces creates what is known as “eigenfaces” that represent features that our classifiers look for when trying to recognize faces

Wow! A haunting and yet beautiful representation of what the data believes to be the most important features of a face. As we move from the top left (first super column) to the bottom, it is actually somewhat easy to see what the image is trying to tell us. The first super column looks like a very general face structure with eyes and nose and a mouth. It is almost saying “I represent the basic qualities of a face that all faces must have.” Our second super column directly to its right seems to be telling us about shadows in the image. The next one might be telling us that skin tone plays a role in detecting who this is, which might be why the third face is much darker than the first two.

Using feature extraction UL methods such as PCA can give us a very deep look into our data and reveal to us what the data believes to be the most important features, not just what we believe them to be. Feature extraction is a great preprocessing tool that can speed up our future learning methods, make them more powerful, and give us more insight into how the data believes it should be viewed. To sum up this section, we will list the pros and cons.

Here are some of the advantages of using feature extraction:

- Our models become much faster

- Our predictive performance can become better

- It can give us insight into the extracted features (eigenfaces)

And here are some of the disadvantages of using feature extraction:

- We lose some of the interpretability of our features as they are new mathematically derived columns, not our old ones

- We can lose predictive performance because we are losing information as we extract fewer columns

Let’s move on to the summary next.

Summary

Our exploration into the world of ML has revealed a vast landscape that extends well beyond the foundational techniques of linear and logistic regression. We delved into decision trees, which provide intuitive insights into data through their hierarchical structure. Naïve Bayes classification offered us a probabilistic perspective, showing how to make predictions under the assumption of feature independence. We ventured into dimensionality reduction, encountering techniques such as feature extraction, which help overcome the COD and reduce computational complexity.

k-means clustering introduced us to the realm of UL, where we learned to find hidden patterns and groupings in data without pre-labeled outcomes. Across these methods, we’ve seen how ML can tackle a plethora of complex problems, from predicting categorical outcomes to uncovering latent structures in data.

Through practical examples, we’ve compared and contrasted SL, which relies on labeled data, with UL, which operates without explicit guidance on the output. This journey has equipped us with a deeper understanding of the various techniques and their appropriate applications within the broad and dynamic field of data science.

As we continue to harness the power of these algorithms, we are reminded of the importance of selecting the right model for the right task—a principle that remains central to the practice of effective data science.