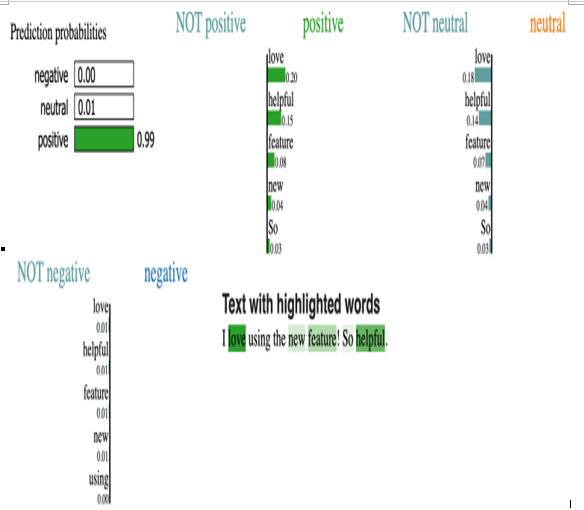

We can then see the visual interpretation (Figure 14.1) of which tokens impact the final decision the most:

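```python
# Sample tweet to explain
# (`explainer` and `predictor` are assumed to be defined earlier in the chapter)
tweet = "I love using the new feature! So helpful."

# Generate the explanation: up to 5 tokens for each of the 3 most probable labels
exp = explainer.explain_instance(tweet, predictor, num_features=5, top_labels=3)
exp.show_in_notebook()
```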

In this case, `num_features` sets the maximum number of features (here, tokens) the explainer uses to describe the prediction. For instance, if it is set to 4, LIME explains the prediction using up to the four features that influence it most. This keeps the explanation simple by focusing on the top n features rather than on every feature. `top_labels` sets how many of the most probable labels you want explanations for, which is mainly useful in multi-class classification: if you set it to 1, LIME explains only the most probable label; if you set it to 2, it explains the two most probable labels, and so on. Figure 14.1 shows what the output would be for this case:
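To make the two parameters more concrete, here is a simplified, standard-library-only sketch of the selection each one performs. The helpers `pick_top_labels` and `pick_top_features` are hypothetical illustrations, not part of LIME's API, and the probabilities and token weights are made-up numbers rather than output from the chapter's model. The label step mirrors how LIME ranks classes by predicted probability for `top_labels`. The feature step corresponds to LIME's `highest_weights` selection. Note that the default `feature_selection='auto'` uses forward selection when `num_features` is 6 or fewer, so treat this as a conceptual picture only.

```python
from typing import List, Sequence, Tuple


def pick_top_labels(probs: Sequence[float], k: int) -> List[int]:
    """Indices of the k most probable classes, highest first
    (the role `top_labels` plays in explain_instance)."""
    return sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]


def pick_top_features(weights: Sequence[Tuple[str, float]],
                      n: int) -> List[Tuple[str, float]]:
    """The n (token, weight) pairs with the largest absolute weight.
    Simplified: comparable to LIME's 'highest_weights' selection."""
    return sorted(weights, key=lambda tw: abs(tw[1]), reverse=True)[:n]


# Made-up values for illustration only (not the chapter's model output).
class_names = ["negative", "neutral", "positive"]
probs = [0.05, 0.15, 0.80]
token_weights = [("love", 0.41), ("helpful", 0.30), ("new", 0.22),
                 ("feature", 0.19), ("using", -0.03), ("I", 0.01), ("So", 0.02)]

# Equivalent in spirit to top_labels=1: explain only the most probable label
print([class_names[i] for i in pick_top_labels(probs, 1)])
# Equivalent in spirit to num_features=4: keep the four strongest tokens
print(pick_top_features(token_weights, 4))
```

Ranking by absolute weight matters because a strongly negative token is just as informative as a strongly positive one.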

Figure 14.1 – Here, the tokens that most affect the decision are “love,” “helpful,” and, surprisingly, “new” and “feature”

Interestingly, in our example, the tokens "new" and "feature" contribute strongly to the positive prediction. In terms of governance, this is a good example of how explainability tools make a model's behavior, and its implications, transparent. Let's test this by writing a statement that we believe should be negative:

```python
# Sample tweet to explain for negative
tweet = "I hate using the new feature! So annoying."

# Generate the explanation
exp = explainer.explain_instance(tweet, predictor, num_features=5, top_labels=3)
exp.show_in_notebook()
```

We obtain the following results in Figure 14.2:

Figure 14.2 – Our sentiment classifier correctly classifies our negative statement

Let's try one more example, this time a statement we'd expect to be classified as neutral:

```python
# Sample tweet to explain for neutral (bias at work)
tweet = "I am using the new feature."

# Generate the explanation
exp = explainer.explain_instance(tweet, predictor, num_features=5, top_labels=3)
exp.show_in_notebook()
```

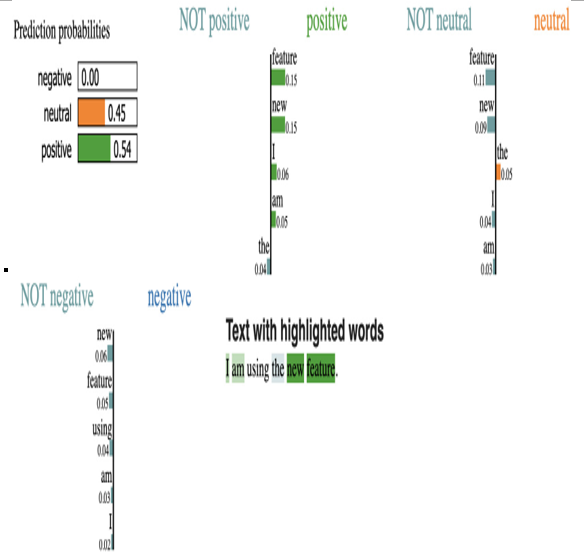

Figure 14.3 shows the resulting graph:

Figure 14.3 – Our neutral statement “I am using the new feature” comes out positive again, thanks to the phrase “new feature”

Fascinating! Our seemingly neutral statement "I am using the new feature" comes out mostly positive, and the tokens "new" and "feature" are the reasons why. To test this further, let's write one more neutral statement that doesn't contain "new feature":

```python
# Sample neutral tweet without "new feature" (control case)
tweet = "I am using the old feature."

# Generate the explanation
exp = explainer.explain_instance(tweet, predictor, num_features=5, top_labels=3)
exp.show_in_notebook()
```

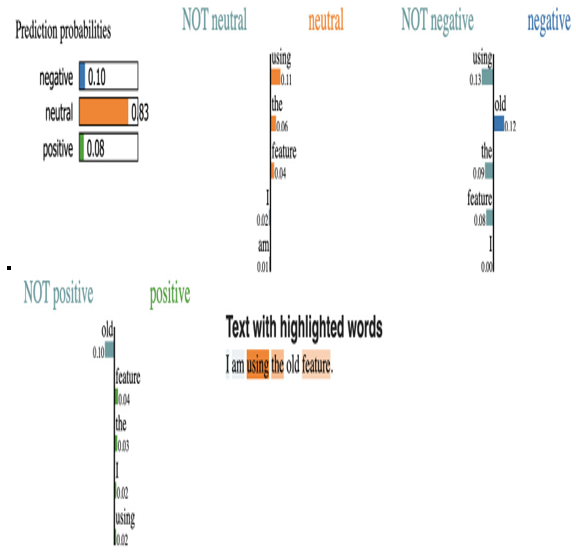

Let’s check the results in Figure 14.4:

Figure 14.4 – Without “new feature,” the classifier performs as expected
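The manual comparison between the "new feature" and "old feature" statements can also be scripted as a token-swap check. Below is a minimal sketch. It assumes a predictor with the same shape LIME's `classifier_fn` expects (a list of strings in, one row of class probabilities per string out) and a class order of negative, neutral, positive. `token_swap_effect` and `toy_predictor` are hypothetical helpers. The toy predictor is a deliberately biased keyword scorer that stands in for the chapter's model; it is not the model itself.

```python
import re
from typing import Callable, Dict, List, Sequence

# Assumed predictor shape (same as LIME's classifier_fn): a list of texts in,
# one list of class probabilities per text out.
Predictor = Callable[[List[str]], List[List[float]]]


def token_swap_effect(predict_proba: Predictor, text: str, original: str,
                      replacement: str, class_names: Sequence[str]) -> Dict[str, float]:
    """Change in each class probability when the whole word `original` is
    replaced by `replacement` (positive value = probability went up)."""
    pattern = rf"\b{re.escape(original)}\b"  # whole-word match only
    if not re.search(pattern, text):
        raise ValueError(f"{original!r} not found as a word in {text!r}")
    # A function replacement avoids backslash escapes in `replacement`
    swapped = re.sub(pattern, lambda _m: replacement, text)
    before, after = predict_proba([text, swapped])
    return {name: after[i] - before[i] for i, name in enumerate(class_names)}


def toy_predictor(texts: List[str]) -> List[List[float]]:
    """Hypothetical stand-in for the chapter's `predictor`: a keyword scorer
    that, like the behavior in Figure 14.3, treats "new" as a positive cue."""
    positive, negative = {"love", "helpful", "new"}, {"hate", "annoying"}
    rows = []
    for t in texts:
        words = re.findall(r"[a-z']+", t.lower())
        score = sum(w in positive for w in words) - sum(w in negative for w in words)
        if score > 0:
            rows.append([0.1, 0.2, 0.7])  # [negative, neutral, positive]
        elif score < 0:
            rows.append([0.7, 0.2, 0.1])
        else:
            rows.append([0.2, 0.6, 0.2])
    return rows


effect = token_swap_effect(toy_predictor, "I am using the new feature.", "new", "old",
                           class_names=["negative", "neutral", "positive"])
print({k: round(v, 2) for k, v in effect.items()})
```

A large drop in the positive probability from a single, sentiment-neutral word swap is the same signal that Figures 14.3 and 14.4 show visually.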

From a governance standpoint, the "new feature" behavior is an opportunity to improve the classifier: with the help of LIME, we have identified a clear token pattern that leads to misclassifications. Of course, finding such patterns one example at a time can become tedious, but this is often the nature of iterative ML development. We can deploy these tools in the cloud to run automatically, but ultimately the remedy is better-quality training data and an updated model.
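One way to reduce the tedium is to explain a batch of texts and aggregate the token weights, so that recurring patterns surface without inspecting each plot. Below is a minimal sketch. It assumes each explanation has already been converted with LIME's `exp.as_list(label=...)`, which returns `(token, weight)` pairs, and that every explanation in the batch is for the same label (for example, positive). `rank_tokens` and the sample batch are hypothetical, and the weights are made-up numbers.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# One explanation as returned by exp.as_list(label=...): (token, weight) pairs
Explanation = List[Tuple[str, float]]


def rank_tokens(explanations: Iterable[Explanation],
                min_count: int = 2) -> List[Tuple[str, float, int]]:
    """Average each token's weight across explanations and rank tokens by
    mean weight, highest first. Tokens seen fewer than `min_count` times
    are dropped to reduce noise from one-off words."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for exp in explanations:
        for token, weight in exp:
            totals[token] += weight
            counts[token] += 1
    ranked = [(tok, totals[tok] / counts[tok], counts[tok])
              for tok in totals if counts[tok] >= min_count]
    return sorted(ranked, key=lambda r: r[1], reverse=True)


# Made-up batch: weights toward the positive label for three different texts
batch = [
    [("love", 0.40), ("new", 0.21), ("feature", 0.18)],
    [("hate", -0.45), ("new", 0.15), ("feature", 0.12)],
    [("using", 0.02), ("new", 0.25), ("feature", 0.20)],
]
for token, mean_w, n in rank_tokens(batch):
    print(f"{token:10s} mean={mean_w:+.2f} seen={n}")
```

LIME weights are local to each instance, so an averaged weight is only a rough screening signal. Tokens like "new" that keep a positive mean weight across otherwise unrelated texts are candidates for a closer look, such as the targeted comparisons used in this section.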